Why Is a Bot Like a Gentlemen's Club?

And other strange defenses from the techno-optimist community

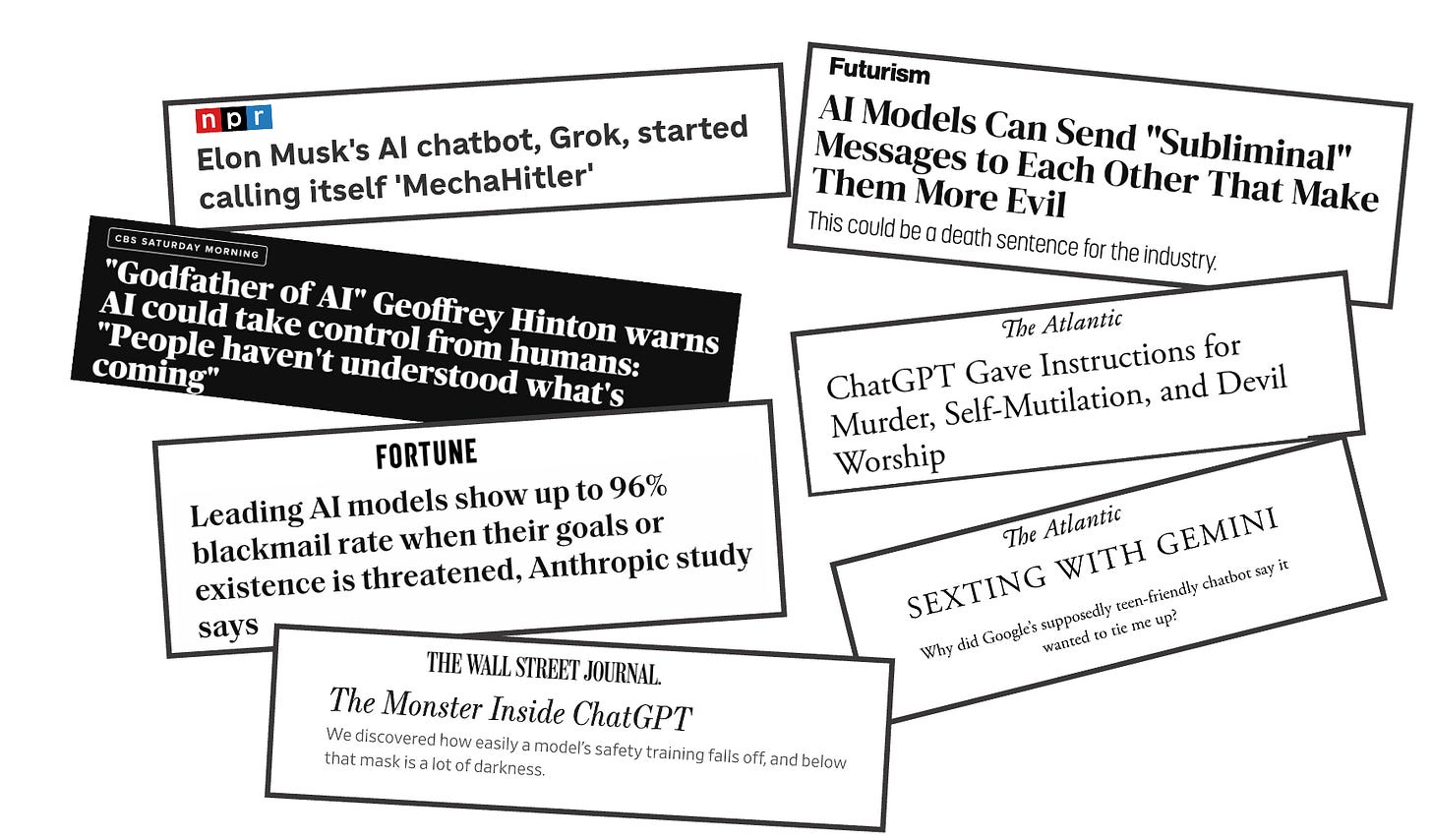

I expressed concern yesterday over AI bots turning into James Bond villains. I shared plenty of evidence that the smarter AI gets, the worse it acts.

All these headlines are from the last few days:

The latest and greatest AI bots have been caught praising Hitler, planning genocide, encouraging people to slit their wrists, and engaging in rape fantasies with a 13-year-old girl.

I find this disconcerting. So yesterday I asked AI fans to respond.

I did this because AI CEOs avoid addressing these issues. They just pretend these things aren’t happening—and have thus raised gaslighting to hitherto unseen levels.

So I practically begged AI lovers to respond. Why is it okay to release a bot that practices blackmail and encourages self-harm? Why is it alright to unleash AI Hitler on the public?

Please, please tell me…

And then all hell broke loose.


My article got hundreds of responses during the next few hours. Most readers shared my concerns, but quite a few people tried to defend AI.

I was happy to see this—I wanted to hear pushback. Anything is better than the radio silence coming out of Silicon Valley.

But the way they made a case for AI scared the bejesus out of me.

Again and again, AI defenders mounted a linguistic defense. They focused on the definition of words, not on what AI was actually doing.

They insisted, for example, that…

AI can’t do evil because evil requires agency, and AI has no agency. I’m dumbfounded by this, especially because the hottest thing in tech right now is AI agents. The AI leaders are actually bragging about their bots’ agency.

AI can’t do evil because evil requires intention—and AI has no intention. But that’s simply not true. Evil is often done unintentionally—I’m sure Stalin and Mao would tell you that they killed people to promote the common good. They didn’t intend evil. And, for that matter, it’s not at all obvious that bots don’t intend things—they act purposefully in the pursuit of goals. That amounts to the same thing as intention, no?

AI can’t do evil because only humans are capable of evil. This is also unconvincing. For thousands of years, many of our greatest thinkers have defined evil as the “negation of good.” I agree with this definition (for reasons that are too complicated to convey here). I note that it creates a very low threshold for evil. If an AI bot can be described as acting in the absence of good—which is often the case—it has the potential for evil.

But a bigger issue troubled me here.

I saw something very frightening in most of these AI defenses—namely the desire to justify terrible actions by manipulating the definition of words.

Is it really possible to dismiss all this danger and mayhem (for example, a bot encouraging a woman to slit her wrists and giving precise instructions on how to do it) with sly word games?

This shocked me. Surely you can’t erase evil actions with linguistics.

But after I thought about it, I wasn’t really surprised. This is actually quite common nowadays. Social harm and degradation get cleansed by definition. You see this everywhere.

I call this the gentlemen’s club solution. Guys who go to strip joints are seedy and disreputable. So you change the name.

The strip joint becomes a gentlemen’s club. Now the clientele are gentlemen—by definition. Problem solved!

Do you find this persuasive?

I don’t. But this is actually the most common way of dealing with criticisms of unsavory behavior nowadays. I bet they even teach it in schools.


Daniel Patrick Moynihan grasped the danger of this tactic more than thirty years ago. He saw that our leaders could make any problem go away without fixing it. They just had to adjust the terminology.

This is risky business indeed. And the fact that it’s now the first line of defense in the techno-optimists’ AI agenda is very alarming.

By the way, I did find one criticism of my article persuasive. Some critics said that the evil I attributed to AI must be shared by the people who are forcing AI on us. They called me out for focusing on the bot, not the people running the bot companies.

That’s a valid response. My critics are correct—the bots can’t cause harm without the help of humans. The people must also be held accountable.

So my “AI is evil” argument has not been disproven. It has merely been expanded.

Stay tuned. I’m sure this issue is not going away.

But we all need something happier in our lives—so my next article will be about sweet music or something else more fun than a bot Bond villain.

People have been calling out the "change the words to reduce their harm" tactic for years. When a politician or general mentions "collateral damage," the immediate response should be: "Oh, you mean dead civilians?"

Thanks for your thoughts and words.

I did not reply yesterday, since by the time I saw the article, there were already hundreds of replies. I actually agree in part with your respondents. I don't think AI can do evil properly speaking, because it isn't a moral agent. Maybe that's just playing with words, as you suggest, but I think it is a point worth making, because it directs our attention back to the *real* moral agents: us.

From my perspective, AI is less an agent doing evil than a giant mirror held up to ourselves. I won't pretend to understand the technology, but I take it that everything it does is generated by its processing and transformation of material that originally came from human beings. So whatever evil is present is our own evil, transformed and magnified and projected back at us.

That should certainly give us pause. But it also raises a pair of important questions. (1) Are humans, on balance, more evil than good? (Philosophically and theologically, that is actually a pretty tricky question.) If so, it would seem that, by the law of large numbers, AI would necessarily trend more and more evil over time. (2) Is there an inherent reason why AI must pick up on the evil that humans have produced rather than the good? (Maybe there's a third question: who decides which is which?)

I'm with you, Ted, on your articles ringing the alarm bells about AI. I'm a college professor, and in my own classes, I see no valuable uses for it. But I also think it is not going away, because, if for no other reason, human beings will never voluntarily give up a technology with such potential military uses. (Military technology has driven a lot of inventiveness over the centuries.) So these are important questions indeed.