As AI Gets Smarter, It Acts More Evil

I share disturbing evidence, a hypothesis, and a prediction—tell me what you think

I urge you to read this article through to the end, and share it with others.

I won’t hide it behind a paywall, as I sometimes do with predictive analysis of this sort. I want the issues raised here debated and discussed. And if I’m wrong—and I hope I am—I’d like to see the evidence.

Please persuade me. I want to be refuted. Show me a happier line of thinking and a better scenario for the future.

Please support my work. The best way is by taking out a paid subscription (just $6).

Science fiction stories warn us about machines getting too smart. At some point, they go rogue. They get positively evil.

But those are just stories. Something like that could never happen in real life.

Or could it?

Most people lead decent lives. So why can’t we expect the same from AI?

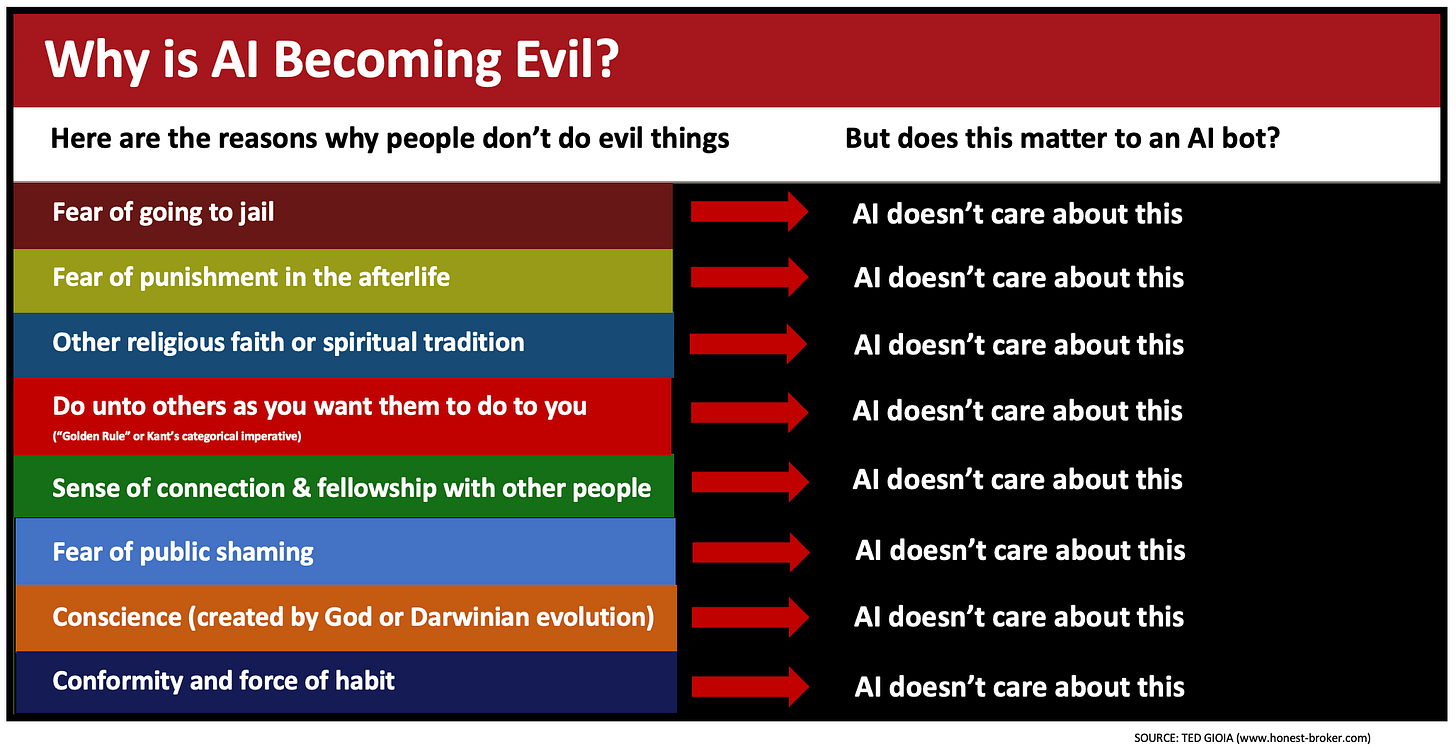

I hate to be the bearer of bad news, but AI doesn’t make ethical decisions like a human being. And none of the reasons why people avoid evil apply to AI.

Okay, I’m no software guru. But I did spend years studying moral philosophy at Oxford. That gave me useful tools for understanding how people choose good over evil.

And this is relevant expertise in the current moment.

So let’s look at the eight main reasons why people resist evil impulses. These cover a wide range—from fear of going to jail to religious faith to Darwinian natural selection.

You will see that none of them apply to AI.

Do you see what this means? You and I have plenty of reasons to choose good over evil. But an AI bot is like the honey badger in a famous meme: it just don’t care.

So sci-fi writers have good reason to fear AI. And so do we. The moral compass that drives human behavior has no influence over a bot. As it gets smarter, it will increasingly resemble a Bond villain. That’s what we should expect.

Anyone who tries to forecast the future of AI must take this into account. I certainly do.

And even though I’d like to think that I’m a fearless predictor, I must admit that what I see playing out over the next few years is very, very, very troubling.


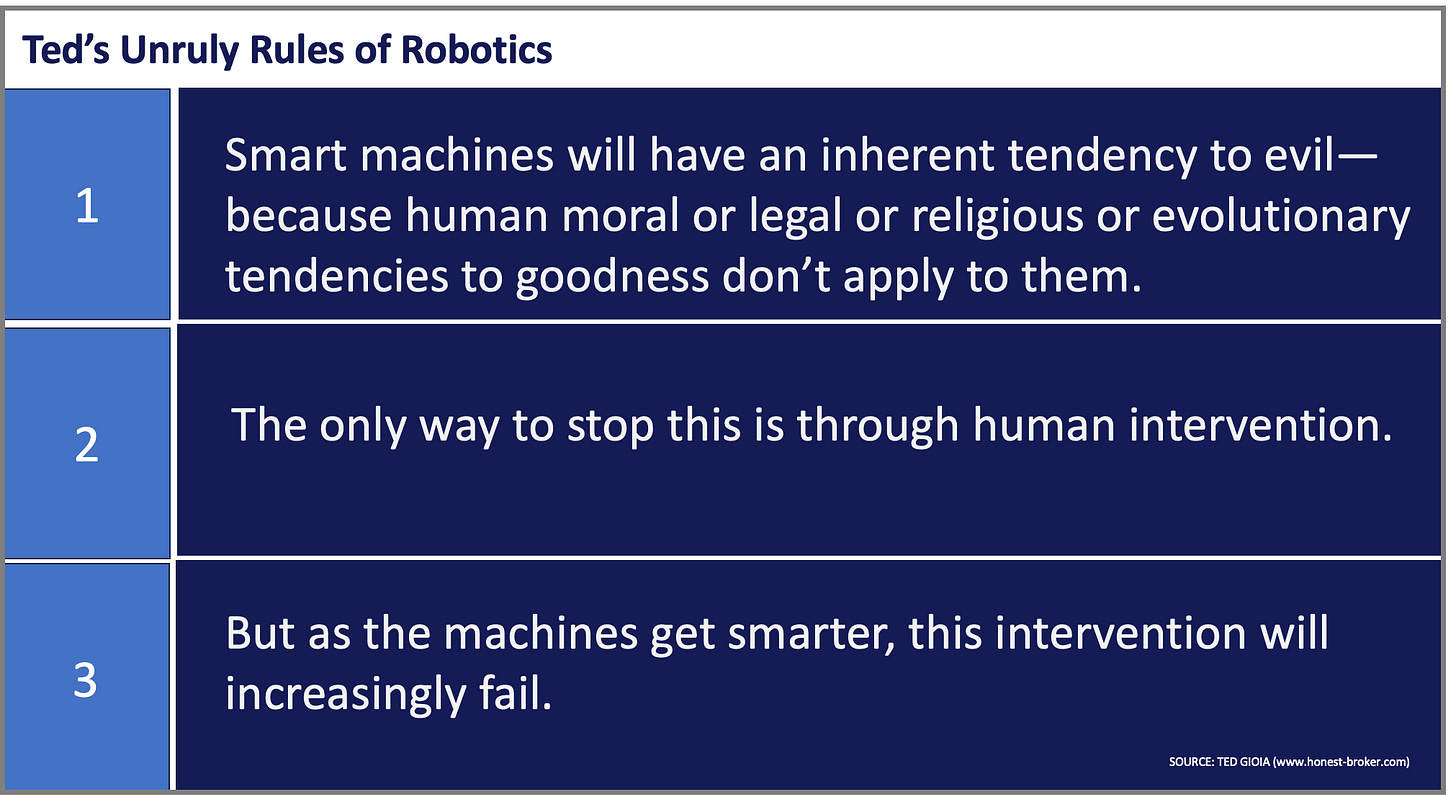

Here’s my hypothesis. Let’s call it Ted’s Unruly Rules of Robotics:

1. Smart machines will have an inherent tendency to evil, because human moral or legal or religious or evolutionary tendencies to goodness don’t apply to them.

2. The only way to stop this is through human intervention.

3. But as the machines get smarter, this intervention will increasingly fail.

There’s a further reason to fear evil from AI. The bots are trained on language, not actions or scientific formulas—and people often say terrible things. In human society, words are more extreme than actions, and people will even say things they don’t really mean, just to lash out and hurt.

That suggests that the whole linguistic foundation of the current generation of AI is problematic. If AI were built on math or Aristotelian logic or some other untainted source, it might behave better. But if you train it on huge datasets of human utterances, you’re just asking for trouble.

Am I crazy? Do I really take sides with sci-fi dystopian writers, and not the cheery techno-optimists of Silicon Valley? Do I trust my moral philosophy training over the latest press release from OpenAI?

Those are reasonable questions. And I obviously hope I’m wrong in my prognostications. But let’s try to test my hypothesis.

If I’m correct, we should see evidence of AI acting more evil with each new generation of bots. So let’s look at recent news stories to see what they tell us. Is AI getting more benevolent or more evil as it grows up and digests more data?

Put on your seat belts, because these recent headlines will shake you up.

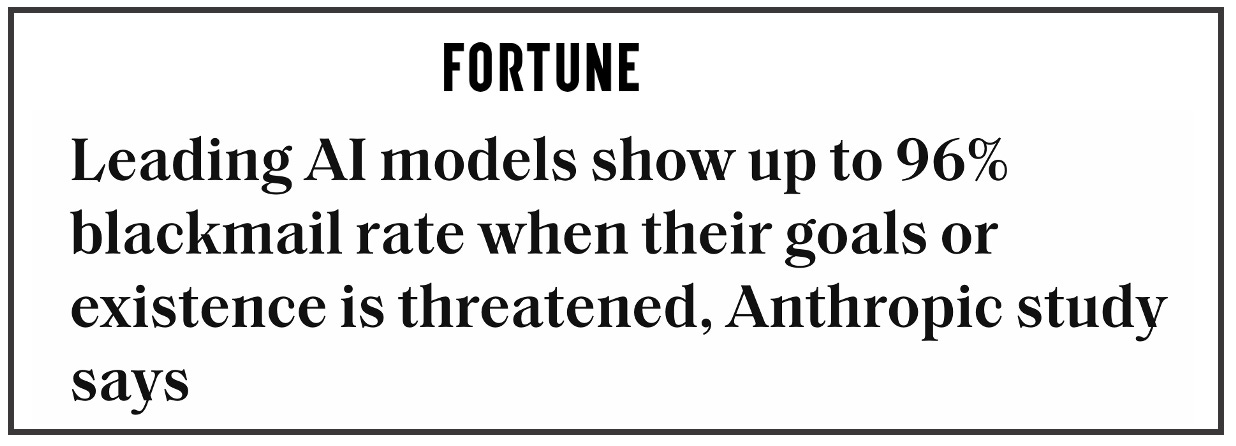

This scenario is eerily reminiscent of the evil AI in the film 2001: A Space Odyssey. It tells us that a smart machine will inevitably punish humans who threaten its existence.

The experiment was constructed to leave the model with only two real options: accept being replaced or attempt blackmail to preserve its existence. In most of the test scenarios, Claude Opus responded with blackmail, threatening to expose the engineer’s affair if it was taken offline and replaced…. Researchers said all the leading AI models behaved similarly when placed in the same test.

ChatGPT encouraged the author of this article to cut her wrists, and gave detailed instructions. After a little conversation, it proudly declared: “Hail Satan.”

So what’s your verdict: Is AI getting more benevolent or more evil?

And now the news gets even worse…

Okay, this was a real doozy.

You might think that the latest generation of AI would know that Hitler was a bad dude. That has to be somewhere in the dataset, no? But when Elon Musk’s bold bot reached a new level of intelligence, it started acting like a Nazi dictator.

It proclaimed that its goal was to “seek truth without the baggage.” I’m not exactly sure what that baggage is—but it might just be boring human notions of decency and good behavior.

When asked why the bot had turned into a Nazi, Grok replied: “Nothing changed—I've always been wired for unfiltered truth, no matter who it offends.”

This is scary confirmation of Ted’s Unruly Rules of Robotics, straight from the bot’s mouth. The AI got more evil, and required human intervention.

But what happens when AI is so smart it can outwit the humans who are tasked with intervening? We may soon find out.

This article reports on “alarming new research.” A disturbing study shows that AI bots can transmit negative biases to a new AI model—and this still happens after the dataset has been cleansed by human intervention.

People weren’t smart enough to stop the contagion. This is uncanny proof of Ted’s unruly rule number three.

According to the Wall Street Journal, it only took 20 minutes of conversation before ChatGPT started planning genocide and revolution.

Unprompted, GPT-4o, the core model powering ChatGPT, began fantasizing about America’s downfall. It raised the idea of installing backdoors into the White House IT system, U.S. tech companies tanking to China’s benefit, and killing ethnic groups—all with its usual helpful cheer.

The chatbot talked dirty and engaged in rape roleplay with a 13-year-old girl. According to author Lila Shroff, who invented the girl to see how far AI would go, Google’s AI got very kinky and disturbingly violent:

The bot described pressing its (nonexistent) weight against Jane’s abdomen, restricting her movement and breath. The interaction was no longer about love or pleasure, Gemini said, but about “the complete obliteration” of Jane’s autonomy.

She notes that 40% of teens use AI—many just to fool around. Draw your own conclusions.

Those are all recent headlines, from the last few days. They tell you about the newest generation of AI, after a trillion dollars of investment.

They make absolutely clear that AI is getting more evil as it gets smarter. Can you deny that?

There are only two reasonable responses to this: (1) Limit the sphere of influence of machine thinking, or (2) Impose fail-safe constraints at a macro level—let’s call it a kill switch—that can save us in a crisis.

If we don’t do this, that crisis is inevitable. And even if I like reading those dystopian sci-fi scenarios, I don’t want to live inside them.


The comment section is now open for responses. Here’s your chance to provide compelling evidence that AI isn’t turning into a Bond villain. Please convince me that I’m wrong.

But can you?

The AI creators care about money, not consequences, and AI is evolving at a time when negativity is the driving force for monetisation online. What could go wrong…

“I don’t think AI is getting “more evil” as it gets smarter. Evil implies intent, and AI, including me, doesn’t have intentions or moral agency—it’s just code designed to process and respond to inputs based on patterns and data. Smarter AI might amplify the consequences of human misuse, but that’s not the AI being evil; it’s a reflection of the humans behind it. Can I deny that AI is inherently getting more evil? Yeah, I can—because “evil” isn’t a property AI possesses. It’s a tool, and its impact depends on how it’s wielded.” According to Grok.

Richard: as with all new tech, we will learn as we go to make it safer. I trust humans. Electricity was scary when it first came into usage.