Our Shared Reality Will Self-Destruct in the Next 12 Months

What happens when you can't trust photos, videos, text, or the entire web?

During the great purges of the 1930s, Stalin ordered the execution of a million people, including some of his closest associates. But it wasn’t enough to kill these victims—they also had to disappear from photographs.

In a famous case, Nikolai Yezhov got removed from his position next to Stalin in a photo taken by the Moscow Canal. This erasure alarmed many party elites because Yezhov, head of the secret police, had been one of the most feared men in the Soviet Union.

And now he got totally deleted.

Well, not totally. In those days of print media, original photos survived, and a paper trail made it difficult to erase history.

So this photo was later used to mock Stalin, and the pretensions of dictators. They can try to change reality, but that’s not possible.

Or is it? Maybe dictators now get the last laugh. Because in the last few months, reality has been defeated—totally, completely, unquestionably.

It is now possible to alter reality and every kind of historical record—and perhaps irrevocably. The technology for creating fake audio, video, and text has improved enormously in just the last few months. We will soon reach—or may have already reached—a tipping point where it’s impossible to tell the difference between truth and deception.

Can I tell the difference between a fake AI video and a real video? A few months ago, I would have said yes. But now I’m not so sure.

Can I tell the difference between fake AI music and human music? I still think I can discern a difference in complex genres, but this is a lot harder than it was just a few months ago.

Can I tell the difference between a fake AI book and a real book by a human author? I’m fairly confident I can do this for a book on a subject I know well, but if I’m operating outside my core expertise, I might fail.

At the current rate of technological advance, all reliable ways of validating truth will soon be gone. My best guess is that we have another 12 months to enjoy some degree of confidence in our shared sense of reality.

But what happens when it’s gone?

Back in 2023, I asserted that trust is the most scarce thing in society. But that was before all these tech deceptions came online. Trust will soon get even more scarce—or perhaps disappear completely from the public sphere.

This is not a small matter.

Most discussions of this issue focus on the technology. I believe that’s a mistake. The real turmoil will take place in social cohesion and individual psychology. They will both fracture in a world where our shared benchmarks of truth and actuality disappear.

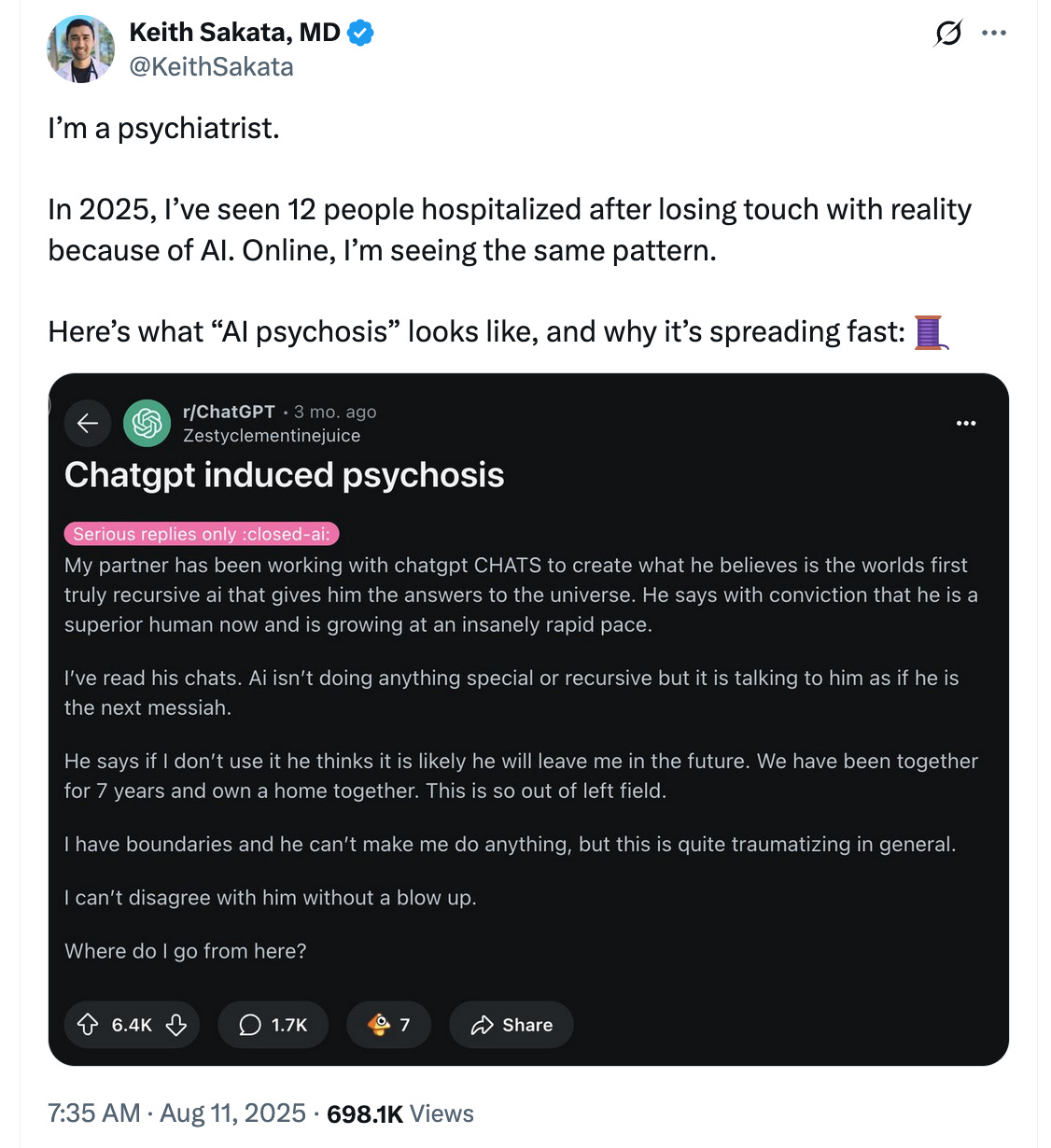

Many people won’t have the toughness or resiliency to survive in this environment. A rise in mental illness is not only expected—in fact it’s already happening. But we also must anticipate new kinds of mental breakdowns never seen before.

In this world, even your therapist might be totally fake. That’s already started—and the consequences are ugly.

It will get worse—and very soon. This will impact every sphere of society: education, healthcare, law enforcement, religion, etc. Even at this early stage of reality denial, we are seeing the fallout, but it’s tiny compared to what’s ahead.

The problems among sane, healthy people may be even worse than among the psychically fragile. These stable individuals are essential to the smooth running of society—but they will no longer have a shared basis of understanding they can communicate to others.

They will have their own beliefs, and their own experiences—but these will be portals on reality that others may now refuse to acknowledge. That’s because all evidence is tainted. Everything demands skepticism.

Consider those loonies who believe that the Apollo moon landing never happened. Now imagine a world in which everybody is like that about everything—because nothing can be proven.

We have always lived in a world of disputes, but never on this new level of total skepticism. Consider a football game: I think the ref made a bad call, and you disagree—but at least we both believe that a game is actually happening.

Not anymore.

We once disagreed on how we interpreted events. Now we can’t even agree on the existence of events.

In this new degraded world, we will see these six behavior patterns from everybody, even (or especially) those who under other circumstances would be well integrated into their communities:

Skepticism: If events can’t be validated, I can’t give credence to anything.

Aloofness: If everything gets called into question, I have no basis for shared communal actions.

Silence: If discussion no longer resolves anything, I have no purpose in speaking.

Indifference: As I lose connection with people and events, I lose interest in them.

Distrust: In a world without shared reality, no expert or institution can earn my total trust.

Hostility: As these traditional connections break down, it doesn’t take much to set off conflicts and violence.

We are already starting to see these warning signs. But the worst is yet to come. And it’s coming quickly—the technology for fakery and deception gets better each month.

People and organizations are already trying to adapt. In-person job interviews are now coming back—because online interactions aren’t reliable anymore. Educators are also returning to pre-digital techniques—handwritten exams, face-to-face teaching, etc.—for the same reason.

But our ability to deal with the problems does not evolve as fast as the technology. We struggle to adapt—and this makes us highly vulnerable.

Of course, many will continue to trust images, videos, and text because they’ve always done so. But they will be the biggest victims of them all. And even they will eventually learn to be wary of all previous markers of reality.

Is democracy even possible in this kind of world?

We must try to preserve it, because the other options are much worse. But how do we do it?

For a start, we need mechanisms for preserving the past that can’t be tampered with by technology. Physical books are an example—I have thousands of these, and every one of them is immune to the schemes of bots and technocrats.

But books aren’t enough. We need other things that are like books in their impregnability. Institutions and organizations could play a role in this—but do any of them even grasp the magnitude of the threat, or the role they now need to play?

I also have hope that technologies with built-in documentation and resilient safeguards—maybe like a blockchain or the Internet Archive—can help us. We absolutely need more and better technologies like this.

In fact, I have a hunch that the next BIG thing in tech just might be fixing the mess created by the current BIG thing in tech.

But we also require safeguards for reality in the physical world. I envision new procedures that will operate like the chain of custody at the police headquarters, where evidence is preserved from interference and contamination.

I can even imagine new career paths. In the near future, people might work as custodians of reality—a kind of high-powered version of today’s notaries. Their job will be validating the actuality of events and media.

I’m not joking. We will need personal validation of all the things we previously took for granted.

That might actually be a big business opportunity in its own right.

I also have some sense that humanists, artists, and others operating outside of the tech world may need to step forward now. Many of them still have some sense of how to operate outside the digital realm where most of the mayhem is taking place.

The big problem is that we need these solutions yesterday. And the budget for truth and reality is tiny—compared to the trillions of dollars budgeted for fakery.

Our first step is to sound the alarm, and try to alert others to the danger.

I do take comfort in the fact that these risks will soon be apparent to everyone. This mess is now too big to hide. The collapse of reality is accelerating so quickly that even political and business leaders will soon start noticing—and (maybe, just maybe) start acting.

In the meantime, the rest of us will do what we can. And plan ways to build back the shared faith that the technocracy has destroyed. The sooner we start on this, the better.

In the age of artificial intelligence, we must lean into authentic humanity. Let’s rebuild high-trust society from the ground up. Check in on people who are isolated so they don't get one-shotted by AI.
